A number of new patents by Sony Interactive Entertainment have just gone public, revealing some of the ideas Sony is experimenting with. These include a PlayStation VR facial tracking patent that would track the wearer's facial features to provide a more realistic experience for users.

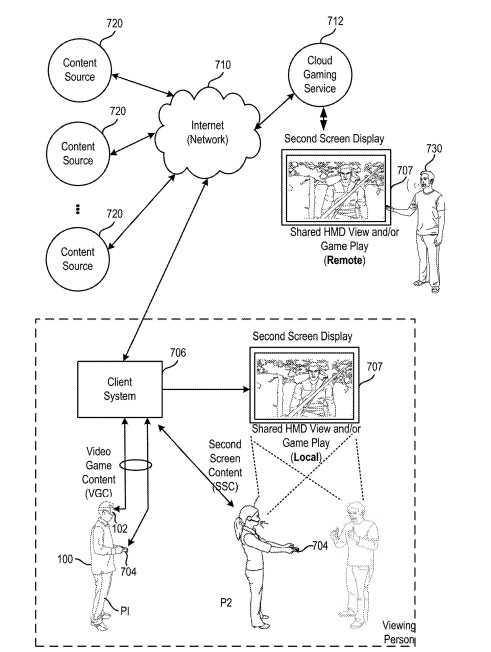

The first part of the “Integration of tracked facial features for VR users in virtual reality environments” patent describes facial tracking that follows the wearer's facial movements, including the eyes and mouth. In the example the company uses, an onscreen avatar mimics the facial expressions of the HMD wearer and is shown to another user who isn’t using an HMD.

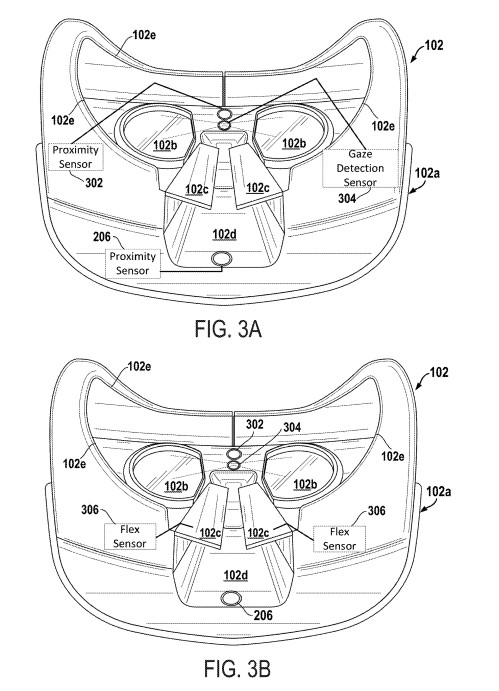

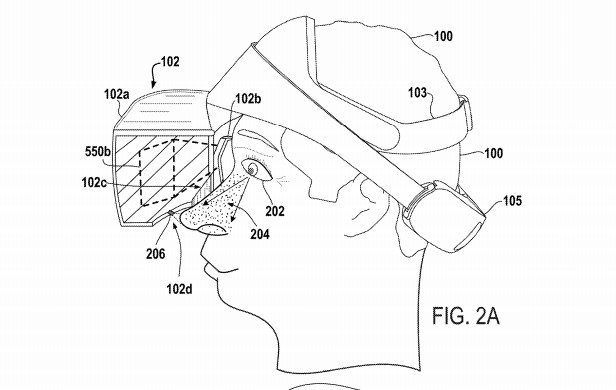

A method for rendering a virtual reality (VR) scene viewable via a head-mounted display (HMD) is provided. The method includes detecting eye gaze of a user using one or more eye gaze sensors disposed in a display housing of the HMD. And, capturing images of a mouth of the user using one or more cameras disposed on the HMD, wherein the images of the mouth include movements of the mouth. Then, the method includes generating a virtual face of the user. The virtual face includes virtual eye movement obtained from the eye gaze of the user and virtual mouth movement obtained from said captured images of the mouth. The method includes presenting an avatar of the user in the VR scene with the virtual face. The avatar of the user is viewable by another user having access to view the VR scene from a perspective that enables viewing of the avatar having the virtual face of the user. Facial expressions and movements of the mouth of the user wearing the HMD are viewable by said other user, and the virtual face of the user is presented without the HMD.
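Sony hasn’t published any code, so the following is only a rough Python sketch of the idea described above: combining eye gaze angles with mouth movement to drive an avatar’s virtual face. Everything here is an assumption for illustration. The `GazeSample`, `MouthLandmarks` and `VirtualFace` types, the idea that mouth landmarks have already been extracted from the camera images, and the clamping limits are all hypothetical and not taken from the patent.

```python
from dataclasses import dataclass


@dataclass
class GazeSample:
    # Hypothetical eye-tracker output: gaze angles in degrees.
    yaw_deg: float
    pitch_deg: float


@dataclass
class MouthLandmarks:
    # Hypothetical 2D landmarks (pixel coordinates) assumed to be extracted
    # from the mouth camera image by some earlier processing step.
    left_corner: tuple[float, float]
    right_corner: tuple[float, float]
    upper_lip: tuple[float, float]
    lower_lip: tuple[float, float]


@dataclass
class VirtualFace:
    eye_yaw_deg: float
    eye_pitch_deg: float
    jaw_open: float  # 0.0 = mouth closed, 1.0 = fully open


def clamp(x: float, lo: float, hi: float) -> float:
    return max(lo, min(hi, x))


def mouth_openness(m: MouthLandmarks, max_ratio: float = 0.6) -> float:
    """Lip gap divided by mouth width, normalised so max_ratio maps to 1.0."""
    width = abs(m.right_corner[0] - m.left_corner[0])
    if width == 0:
        return 0.0
    gap = abs(m.lower_lip[1] - m.upper_lip[1])
    return clamp(gap / width / max_ratio, 0.0, 1.0)


def build_virtual_face(gaze: GazeSample, mouth: MouthLandmarks,
                       max_eye_deg: float = 30.0) -> VirtualFace:
    """Copy the tracked gaze onto the avatar's eyes and the mouth opening onto its jaw."""
    return VirtualFace(
        eye_yaw_deg=clamp(gaze.yaw_deg, -max_eye_deg, max_eye_deg),
        eye_pitch_deg=clamp(gaze.pitch_deg, -max_eye_deg, max_eye_deg),
        jaw_open=mouth_openness(mouth),
    )
```

For example, a wearer looking slightly right with the mouth half open would produce a face whose `eye_yaw_deg` follows the gaze and whose `jaw_open` is around 0.5, which an avatar rig could then display to the other user without the headset in the way.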

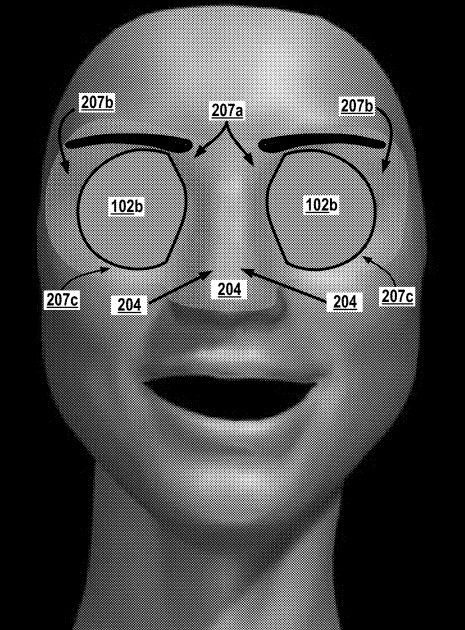

What’s more, the company also details in the patent the ability to identify a user’s nose. Yeah, we know it sounds pretty weird, but the explanation provided does make some sense: showing part of the nose may offer a more realistic experience for the user.

The following implementations of the present disclosure provide methods, systems, computer-readable media, and cloud systems, for rendering virtual reality (VR) views into VR scenes for presentation to a head-mounted display (HMD). One method includes sensing the position of a nose of the user when the HMD is worn by the user. The method includes identifying a model of the nose of the user based on the position that is sensed. The model of the nose having a dimension that is based on the position of the nose of the user when the HMD is worn. The method further includes rendering images to a screen of the HMD to present the VR scenes. The images being augmented to include nose image data from the model of the nose. In one example, the HMD is configured to capture facial feature expressions which are used to generate avatar faces of the user, and convey facial expressions and/or emotion.
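To make the nose idea a little more concrete, here is another hypothetical Python sketch, not Sony’s method. It assumes the headset can sense how far the nose sits from the display (`sensed_depth_mm`), picks the closest template from a made-up library of nose models, scales the model’s on-screen width based on that sensed position, and then blends a simple nose silhouette into the bottom centre of a grayscale frame. The template values, the depth-to-width scaling and the rectangular silhouette are all illustrative assumptions.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class NoseModel:
    name: str
    depth_mm: float  # hypothetical: distance from the display to the nose
    width_px: int    # hypothetical: on-screen width of the nose silhouette


# Hypothetical template library; a closer nose appears wider on screen.
NOSE_LIBRARY = [
    NoseModel("small", depth_mm=18.0, width_px=40),
    NoseModel("medium", depth_mm=14.0, width_px=60),
    NoseModel("large", depth_mm=10.0, width_px=80),
]


def identify_nose_model(sensed_depth_mm: float, library=NOSE_LIBRARY) -> NoseModel:
    """Pick the template closest to the sensed position, then scale its width
    so that the model's dimension depends on where the nose actually sits."""
    if sensed_depth_mm <= 0:
        raise ValueError("sensed depth must be positive")
    template = min(library, key=lambda m: abs(m.depth_mm - sensed_depth_mm))
    scaled_width = round(template.width_px * template.depth_mm / sensed_depth_mm)
    return replace(template, depth_mm=sensed_depth_mm, width_px=scaled_width)


def augment_with_nose(frame, model: NoseModel, alpha=0.5, nose_value=0.8):
    """Blend a rectangular nose silhouette into the bottom quarter of a
    grayscale frame (list of rows, values 0..1). Returns a new frame."""
    h, w = len(frame), len(frame[0])
    half, cx = model.width_px // 2, w // 2
    out = [row[:] for row in frame]
    for y in range(h - h // 4, h):
        for x in range(max(0, cx - half), min(w, cx + half)):
            out[y][x] = (1 - alpha) * frame[y][x] + alpha * nose_value
    return out
```

A partially transparent blend (`alpha=0.5`) is used here simply because the patent talks about showing the nose at least partially, so the scene behind it stays visible, much like the faint nose outline we normally tune out.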

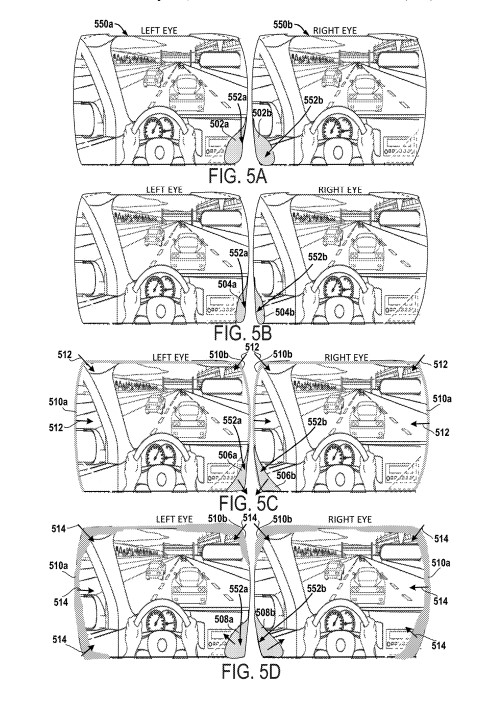

The embodiments described herein, therefore, enable systems of the HMD to track features of the user’s face, while wearing the HMD. The features can include identifying characteristics of the user’s nose and using that information to render at least a portion of the user’s nose in images presented to the screens of the HMD for viewing the virtual-reality content. By presenting images of the user’s nose in the images of the scenes being viewed, the images or partial images of the user’s nose will resemble the views that a user typically sees when not wearing an HMD. As such, the user’s brain viewing images will be accustomed to seeing at least part of the user’s own nose in the scenes, and the user’s brain will automatically filter out the presence of the nose.

However, by providing the user’s nose or partial views of the user’s nose in the scenes, it is possible for the user to feel a more realistic experience when viewing the virtual-reality content. If the user’s nose is not provided, at least partially in the images, the user’s appearance or perception of the virtual-reality scenes will be less than natural. As such, by providing this integration into the images, in locations where the user is expecting to see at least part of the user’s nose, the views into the virtual-reality content will be more natural, and as expected by the user’s natural brain reactions to filter out the nose. Embodiments described herein present methods for identifying the nose, characterizing the nose physical characteristics, and then generating models of the nose for presentation at least partially in the scenes.

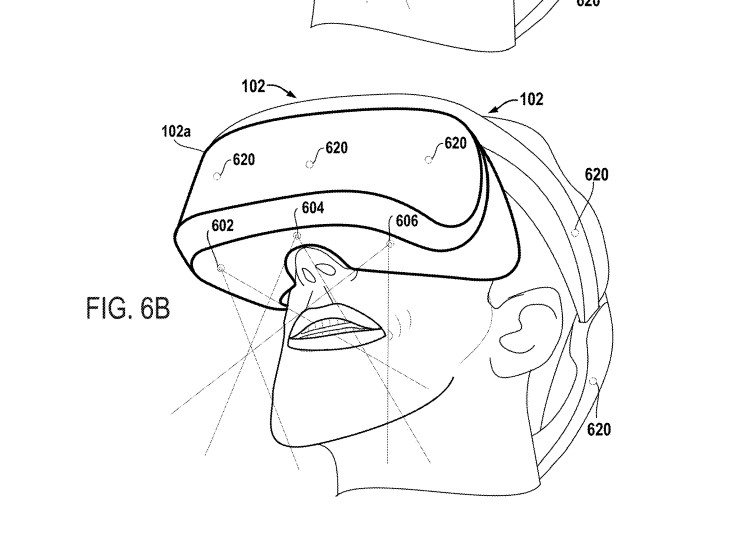

Among the images above is one showing how this may work in a game, with the nose itself at the center point. You may also notice that the headset (presumably PSVR) has a slightly different design from the original model. Don’t take this as an indication of what a new VR headset will look like should Sony continue VR support into the PlayStation 5.

The ability to track facial expressions via the headset itself is a pretty cool idea, and it’s one that other VR headset companies have already been testing. One notable example is Veeso, which published a video back in 2016 showing just that. Unfortunately, it never met its Kickstarter goal, and the project was canceled shortly after. Facebook is also experimenting with the idea.

For those who are active VR users, is this something that would interest you? For our part, we can see emotes becoming far easier to perform with this kind of technology. What are your thoughts? Let us know in the discussion below!